Cross-Validation Examples

Figure 8.14

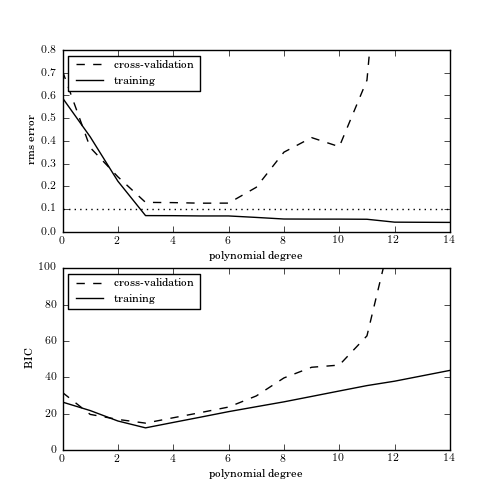

The top panel shows the root-mean-square (rms) training error and validation error for our toy model (eq. 8.75) as a function of the polynomial degree d. The horizontal dotted line marks the level of intrinsic scatter in the data (dy = 0.1). Models with polynomial degree from 3 to 5 minimize the validation rms error. The bottom panel shows the Bayesian information criterion (BIC) for the training and cross-validation subsamples. According to the BIC, a degree-3 polynomial gives the best fit to this data set.
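For reference, the quantities in the two panels can be written out as follows. This is a sketch matched to what the script computes, where p_d is the degree-d polynomial fitted to the training set, σ = dy = 0.1, and N = 20 (both the training and the validation sets have 20 points; the validation curves evaluate p_d on the validation set). The last line gives the conventional BIC for Gaussian errors of known width, for comparison only; it is not the quantity plotted, which uses the square root of χ² and a penalty of d rather than d + 1 parameters.

```latex
\mathrm{rms}_d = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left[y_i - p_d(x_i)\right]^2},
\qquad
\chi^2_d = \sum_{i=1}^{N}\frac{\left[y_i - p_d(x_i)\right]^2}{\sigma^2}

% Quantity plotted in the bottom panel, as computed in the script:
\mathrm{BIC}^{\rm plot}_d = \frac{\sqrt{N}\,\mathrm{rms}_d}{\sigma} + d\ln N
                          = \sqrt{\chi^2_d} + d\ln N

% Conventional BIC, with k = d + 1 fitted coefficients:
\mathrm{BIC}_d = -2\ln L^0_d + k\ln N = \chi^2_d + (d+1)\ln N + \mathrm{const}
```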

WARNING: RankWarning: Polyfit may be poorly conditioned [numpy.lib.polynomial]
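The RankWarning above comes from the highest-degree fits in the script: `np.polyfit` emits it when the coefficient matrix of its least-squares fit is rank-deficient, and the script fits degrees up to 20 to only 20 training points. A minimal sketch of why, assuming the same training abscissae as the script (`conds` and `rank20` are illustrative names): the design matrix of a degree-d fit is the Vandermonde matrix with d + 1 columns, whose condition number grows rapidly with d. Note that `np.polyfit` rescales the columns before solving, so its own rank test is less pessimistic than these raw numbers, but a degree-20 fit to 20 points is rank-deficient regardless.

```python
import numpy as np

# Same 20 abscissae as the script: 22 points on [0, 3] with the endpoints dropped
x = np.linspace(0, 3, 22)[1:-1]

# Design matrix for a degree-deg fit: columns x**deg, ..., x**1, x**0
conds = {}
for deg in (1, 5, 10, 15):
    V = np.vander(x, deg + 1)
    conds[deg] = np.linalg.cond(V)  # ratio of largest to smallest singular value
    print(f"degree {deg:2d}: condition number {conds[deg]:.2e}")

# Degree 20 needs 21 coefficients but there are only 20 data points,
# so the design matrix cannot have full column rank
V20 = np.vander(x, 21)
rank20 = np.linalg.matrix_rank(V20)
print(f"degree 20: numerical rank {rank20} of {V20.shape[1]} columns")
```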

# Author: Jake VanderPlas
# License: BSD
# The figure produced by this code is published in the textbook
# "Statistics, Data Mining, and Machine Learning in Astronomy" (2013)
# For more information, see http://astroML.github.com
# To report a bug or issue, use the following forum:
# https://groups.google.com/forum/#!forum/astroml-general

import numpy as np
from matplotlib import pyplot as plt

#----------------------------------------------------------------------
# This function adjusts matplotlib settings for a uniform feel in the textbook.
# Note that with usetex=True, fonts are rendered with LaTeX. This may
# result in an error if LaTeX is not installed on your system. In that case,
# you can set usetex to False.
from astroML.plotting import setup_text_plots
setup_text_plots(fontsize=8, usetex=True)

#------------------------------------------------------------
# Define our functional form: x sin(x) plus Gaussian noise
# with standard deviation dy
def func(x, dy=0.1):
    return np.random.normal(np.sin(x) * x, dy)

#------------------------------------------------------------
# Select the (noisy) training data: 20 evenly spaced points in (0, 3)
np.random.seed(0)
x = np.linspace(0, 3, 22)[1:-1]
dy = 0.1
y = func(x, dy)

#------------------------------------------------------------
# Select the cross-validation points: 20 random points in [0, 3)
# drawn from the same noisy model
np.random.seed(1)
x_cv = 3 * np.random.random(20)
y_cv = func(x_cv, dy)
x_fit = np.linspace(0, 3, 1000)

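#------------------------------------------------------------
# Plot errors as a function of polynomial degree d.
# With only 20 training points the highest degrees are ill-conditioned
# (degree 20 has more coefficients than data points), which is what
# triggers numpy's RankWarning.
d = np.arange(0, 21)
training_err = np.zeros(d.shape)
crossval_err = np.zeros(d.shape)

fig = plt.figure(figsize=(5, 5))

for i in range(len(d)):
    # Fit a degree-d[i] polynomial to the training set only
    p = np.polyfit(x, y, d[i])
    # rms error on the training set and on the held-out validation set
    training_err[i] = np.sqrt(np.sum((np.polyval(p, x) - y) ** 2)
                              / len(y))
    crossval_err[i] = np.sqrt(np.sum((np.polyval(p, x_cv) - y_cv) ** 2)
                              / len(y_cv))

# BIC-style score: sqrt(N) * rms / dy equals sqrt(chi^2), and
# d * ln(N) penalizes model complexity
BIC_train = np.sqrt(len(y)) * training_err / dy + d * np.log(len(y))
BIC_crossval = np.sqrt(len(y)) * crossval_err / dy + d * np.log(len(y))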

# Top panel: rms error versus polynomial degree
ax = fig.add_subplot(211)
ax.plot(d, crossval_err, '--k', label='cross-validation')
ax.plot(d, training_err, '-k', label='training')
ax.plot(d, dy * np.ones(d.shape), ':k')  # intrinsic scatter of the data
ax.set_xlim(0, 14)
ax.set_ylim(0, 0.8)
ax.set_xlabel('polynomial degree')
ax.set_ylabel('rms error')
ax.legend(loc=2)

# Bottom panel: BIC versus polynomial degree
ax = fig.add_subplot(212)
ax.plot(d, BIC_crossval, '--k', label='cross-validation')
ax.plot(d, BIC_train, '-k', label='training')
ax.set_xlim(0, 14)
ax.set_ylim(0, 100)
ax.legend(loc=2)
ax.set_xlabel('polynomial degree')
ax.set_ylabel('BIC')

plt.show()
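The script above reads the preferred degree off the plot. A minimal sketch of doing the selection programmatically, assuming the same data and seeds as the script, and swapping in the conventional BIC (χ² + (d + 1) ln N) alongside the script's sqrt(χ²) + d ln N for comparison. The names `rms_cv`, `bic_conventional`, `best_cv`, and `best_bic` are illustrative; because the penalty differs from the plotted curve, the printed degrees need not match the caption's reading exactly.

```python
import numpy as np


def func(x, dy=0.1):
    # Same toy model as the script: x sin(x) plus Gaussian noise of width dy
    return np.random.normal(np.sin(x) * x, dy)


dy = 0.1

# Reproduce the training and validation samples with the script's seeds
np.random.seed(0)
x = np.linspace(0, 3, 22)[1:-1]
y = func(x, dy)

np.random.seed(1)
x_cv = 3 * np.random.random(20)
y_cv = func(x_cv, dy)

N = len(y)
degrees = np.arange(0, 15)  # the range shown in the figure
rms_cv = np.zeros(len(degrees))
chi2_train = np.zeros(len(degrees))

for i, deg in enumerate(degrees):
    p = np.polyfit(x, y, deg)  # fit on the training set only
    rms_cv[i] = np.sqrt(np.mean((np.polyval(p, x_cv) - y_cv) ** 2))
    chi2_train[i] = np.sum(((np.polyval(p, x) - y) / dy) ** 2)

# Conventional BIC for Gaussian errors of known width (up to a constant):
# chi^2 + k ln N with k = deg + 1 fitted coefficients
bic_conventional = chi2_train + (degrees + 1) * np.log(N)

# The quantity plotted by the script: sqrt(chi^2) + deg * ln N
bic_script = np.sqrt(chi2_train) + degrees * np.log(N)

best_cv = degrees[np.argmin(rms_cv)]
best_bic = degrees[np.argmin(bic_conventional)]
print("degree minimizing validation rms:", best_cv)
print("degree minimizing conventional BIC:", best_bic)
```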